Understanding Large Language Model Behaviors through Interactive Counterfactual Generation and Analysis

Abstract

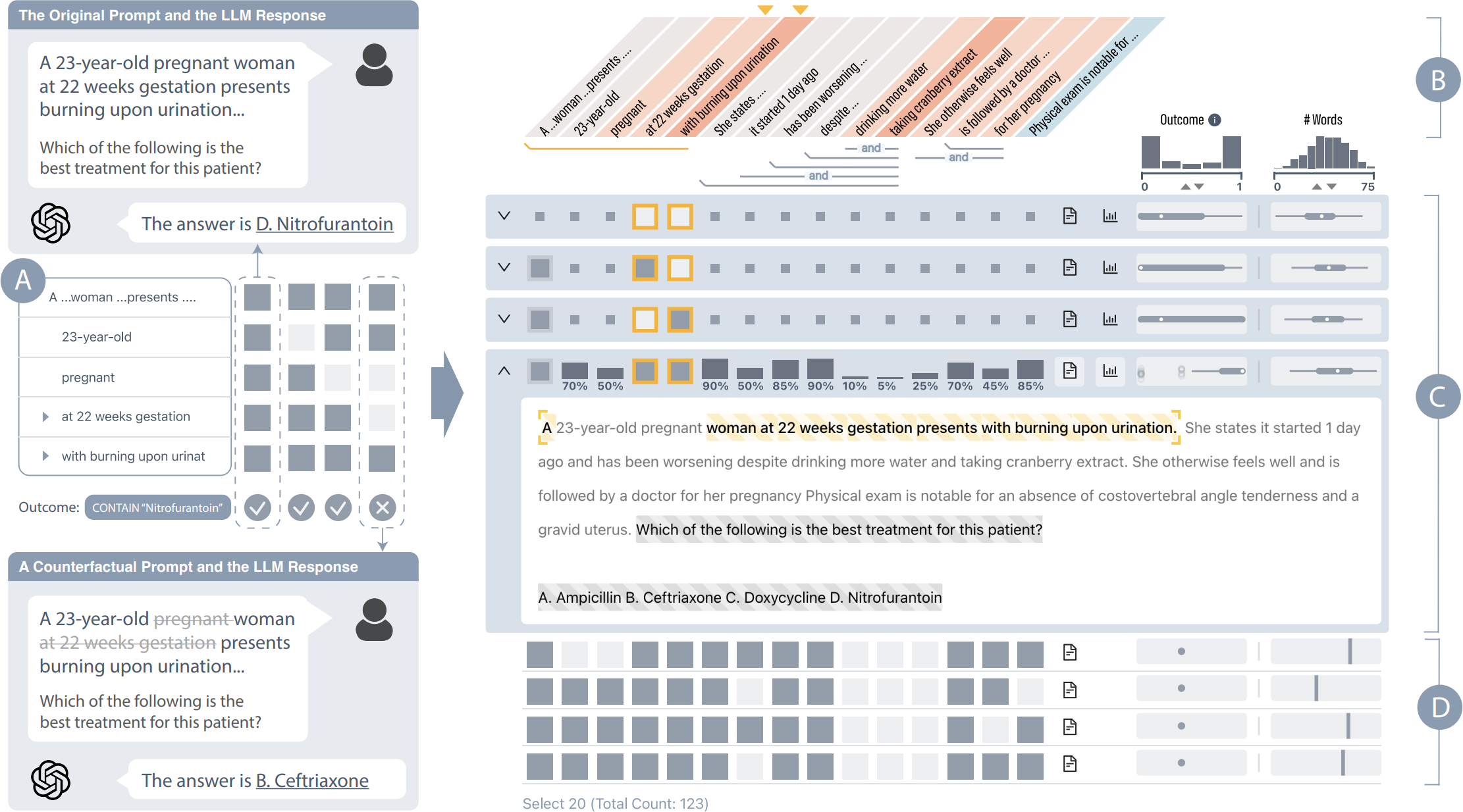

Understanding the behavior of large language models (LLMs) is crucial for ensuring their safe and reliable use. However, existing explainable AI (XAI) methods for LLMs primarily rely on word-level explanations, which are often computationally inefficient and misaligned with human reasoning processes. Moreover, these methods often treat explanation as a one-time output, overlooking its inherently interactive and iterative nature. In this paper, we present LLM Analyzer, an interactive visualization system that addresses these limitations by enabling intuitive and efficient exploration of LLM behaviors through counterfactual analysis. Our system features a novel algorithm that generates fluent and semantically meaningful counterfactuals via targeted removal and replacement operations at user-defined levels of granularity. These counterfactuals are used to compute feature attribution scores, which are then integrated with concrete examples in a table-based visualization, supporting dynamic analysis of model behavior. A user study with LLM practitioners and interviews with experts demonstrate the system's usability and effectiveness, emphasizing the importance of involving humans in the explanation process as active participants rather than passive recipients.
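To make the link between counterfactuals and attribution scores concrete, the following is a minimal sketch, not the algorithm used by LLM Analyzer. It assumes the input has already been split into segments at some chosen granularity, covers only the removal operation (no replacement and no fluency check), and scores each segment by the average drop in a model's output across the counterfactuals that remove it. The names `removal_counterfactuals`, `attribution_scores`, and `toy_predict` are hypothetical and introduced only for illustration; `toy_predict` is a keyword rule standing in for a real LLM call.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Sequence, Tuple


def removal_counterfactuals(
    segments: Sequence[str], max_removed: int = 1
) -> List[Tuple[FrozenSet[int], str]]:
    """Build counterfactual texts by deleting up to `max_removed` segments.

    Returns (indices of removed segments, resulting text) pairs.
    Assumption: joining the remaining segments with spaces is acceptable;
    no fluency or grammaticality check is performed here.
    """
    results = []
    for k in range(1, max_removed + 1):
        for removed in combinations(range(len(segments)), k):
            kept = [s for i, s in enumerate(segments) if i not in removed]
            if kept:  # skip the empty text
                results.append((frozenset(removed), " ".join(kept)))
    return results


def attribution_scores(
    segments: Sequence[str],
    predict: Callable[[str], float],
    max_removed: int = 1,
) -> List[float]:
    """Score each segment by the mean output drop when it is removed."""
    baseline = predict(" ".join(segments))
    counterfactuals = removal_counterfactuals(segments, max_removed)
    scores = []
    for i in range(len(segments)):
        deltas = [
            baseline - predict(text)
            for removed, text in counterfactuals
            if i in removed
        ]
        scores.append(sum(deltas) / len(deltas) if deltas else 0.0)
    return scores


def toy_predict(text: str) -> float:
    """Hypothetical stand-in for a model: 1.0 if 'fever' is mentioned."""
    return 1.0 if "fever" in text else 0.0


segments = ["The patient has a fever", "and a mild cough", "since Monday"]
scores = attribution_scores(segments, toy_predict, max_removed=1)
for segment, score in zip(segments, scores):
    print(f"{score:+.2f}  {segment}")
```

In this toy setting only the first segment changes the output when removed, so it receives the full attribution. Pairing each score with the counterfactual texts that produced it is one way to keep aggregate attributions tied to concrete examples.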